Using AI in Obics

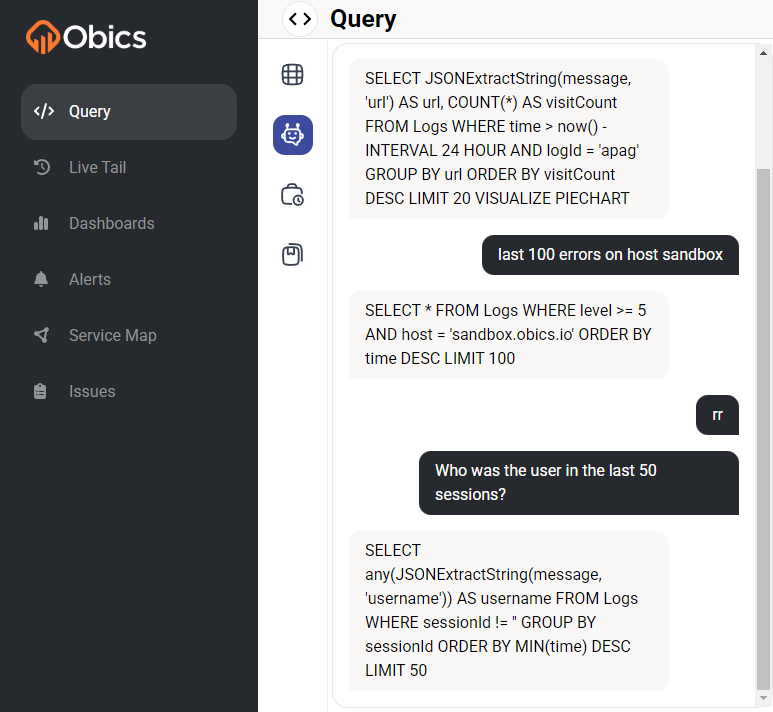

Obics uses an AI model trained on your own logs, metrics, and traces so that you can ask questions in natural language. To use it, open the AI Assistant tab on the "Query" page and ask away.

The assistant always generates a fresh query from the latest prompt. It doesn't remember context from previous questions. In the near future, the assistant will keep that context, so it will be able to fix a query based on a follow-up prompt or add an explanation to a query.

There are a few shortcuts for working with the assistant faster:

- Entering `rr` in the chat input will insert the last query generated by the assistant into the bottom of the current tab and run it.
- Entering `r` in the chat input will insert the last query generated by the assistant into the bottom of the current tab without running it.
- Entering `c` in the chat input will copy the last query generated by the assistant to the clipboard.
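
The three shortcuts can be summarized as a small dispatch sketch. This is only an illustrative model of the behavior described above, not Obics code: `QueryTab`, `handle_shortcut`, and the exact-match rule for the input are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class QueryTab:
    """Hypothetical stand-in for the current query tab."""
    queries: list[str] = field(default_factory=list)   # queries placed in the tab
    executed: list[str] = field(default_factory=list)  # queries that were run
    clipboard: str = ""


def handle_shortcut(text: str, last_query: str, tab: QueryTab) -> bool:
    """Apply a chat shortcut; return False if `text` is an ordinary prompt.

    Assumption: a shortcut is recognized only when the input is exactly
    `rr`, `r`, or `c`.
    """
    if text == "rr":
        tab.queries.append(last_query)   # insert at the bottom of the tab...
        tab.executed.append(last_query)  # ...and run it
    elif text == "r":
        tab.queries.append(last_query)   # insert without running
    elif text == "c":
        tab.clipboard = last_query       # copy to the clipboard
    else:
        return False
    return True
```

In this model, anything other than the three shortcuts falls through and would be treated as a regular question for the assistant.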

How it works

The AI assistant is based on OpenAI's GPT-4o model, fine-tuned with data from your logs, metrics, and traces. Fine-tuning is a scheduled process that runs periodically, depending on need.

LLMs are extremely good at writing SQL queries. Obics helps further by providing automatic basic training on your telemetry data, so you should get good results from the start without any configuration.

However, there are many reasons why an AI won't always be able to generate correct queries. To help the assistant, you can provide examples of "good answers" by typing `GA:` in the chat input. This opens a dialog where you can write a question and a "good query" that answers that question.

Providing a "good answer" is especially useful for a question that the assistant didn't answer correctly but for which you were able to write a correct query yourself. Once you add that example, there's a much better chance the assistant will generate a good query the next time you or someone else on your team asks a similar question.
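
As a rough illustration of what a question and "good query" pair might look like, the sketch below defines one example and checks that its query runs against a tiny sample table. Everything here is an assumption made for illustration: the `logs` table, its columns, the `good_answer` structure, and the `check_good_answer` helper are hypothetical, and SQLite stands in for whatever SQL dialect your Obics workspace uses.

```python
import sqlite3

# Hypothetical "good answer": the table and column names are invented.
good_answer = {
    "question": "How many error logs did each service produce?",
    "query": (
        "SELECT service, COUNT(*) AS errors "
        "FROM logs WHERE level = 'error' "
        "GROUP BY service ORDER BY errors DESC"
    ),
}


def check_good_answer(example: dict[str, str]) -> list[tuple]:
    """Run the example query on an in-memory sample table and return its rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logs (service TEXT, level TEXT, message TEXT)")
    conn.executemany(
        "INSERT INTO logs VALUES (?, ?, ?)",
        [
            ("api", "error", "timeout"),
            ("api", "error", "bad gateway"),
            ("worker", "error", "retry exhausted"),
            ("worker", "info", "job done"),
        ],
    )
    rows = conn.execute(example["query"]).fetchall()
    conn.close()
    return rows


print(check_good_answer(good_answer))
```

A pair like this works best when the question is phrased the way a teammate would naturally ask it and the query is one you have already confirmed returns the right result.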