By: Ron Miller | Published: April 14, 2025

5 LLMs, One Complex React Challenge: Who Built the Best Waterfall View?

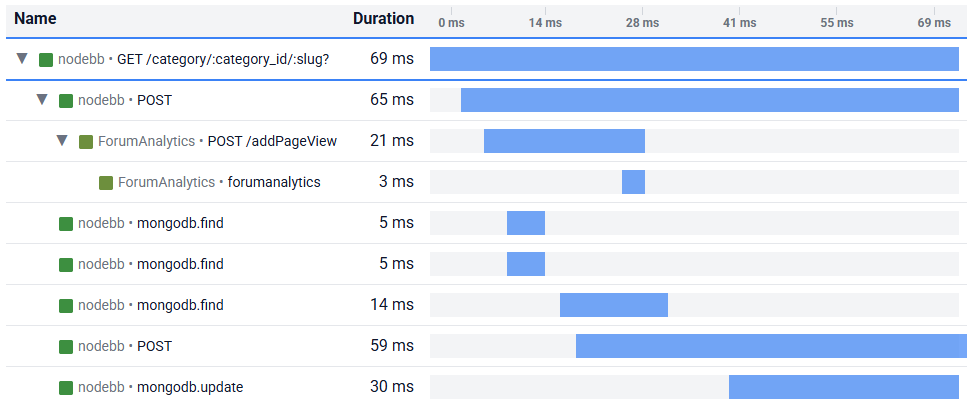

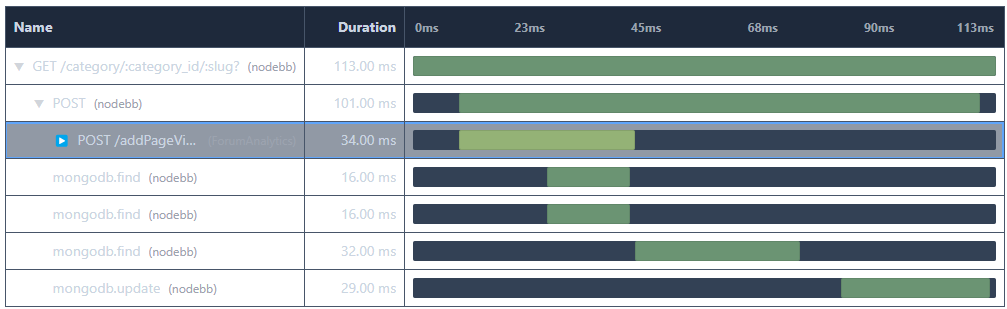

I continue to be amazed by the usefulness of LLMs, especially for software development. The biggest productivity boost for me, hands down, is creating UI components and pages. That work is time-consuming and not especially interesting, so I’m all too happy to let AI do it for me. As it happens, I had to create a non-trivial component: a waterfall view of traces and spans, something like this:

This seemed like a good opportunity to compare models. The component is not too easy, but not super complex either: something that should challenge the models while staying within the realm of the possible. So let's do just that, starting with defining the rules of the game.

Setting up the comparison

The lineup will consist of: ChatGPT o1, DeepSeek, Claude 3.7 Sonnet, Gemini 2.5 Pro, and Vercel V0. Vercel V0 isn't an LLM per se, but for a user who wants to create React UI, it offers the same thing as the others.

The plan is to send the same prompts to each LLM. The first task will be creating the initial view. The second task will be adding time markers in the waterfall column. The third task will be adding keyboard navigation, with up/down arrows to move between rows and left/right arrows to collapse/expand. The fourth task will be adding a smooth transition to the expand and collapse actions.
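To give a sense of what the keyboard task involves, the arrow-key behavior can be modeled as a small pure function over the list of currently visible rows. This is only an illustrative sketch, not code produced by any of the tools: the names `NavRow`, `NavState`, and `nextNavState` are hypothetical, and selecting the first row when nothing is selected yet is my own assumption.

```typescript
// Hypothetical types for illustration; none of these names come from the generated components.
interface NavRow {
  id: string;
  hasChildren: boolean;
}

interface NavState {
  selectedId: string | null;
  collapsed: ReadonlySet<string>; // ids of rows whose children are hidden
}

type ArrowKey = "ArrowUp" | "ArrowDown" | "ArrowLeft" | "ArrowRight";

// `rows` holds only the visible rows, top to bottom, so rows hidden under a
// collapsed parent are skipped automatically when moving up or down.
function nextNavState(state: NavState, rows: NavRow[], key: ArrowKey): NavState {
  const index = rows.findIndex((r) => r.id === state.selectedId);
  if (index === -1) {
    // Assumption: with nothing selected, any arrow key selects the first row.
    return rows.length > 0 ? { ...state, selectedId: rows[0].id } : state;
  }
  const row = rows[index];
  switch (key) {
    case "ArrowUp":
      return { ...state, selectedId: rows[Math.max(index - 1, 0)].id };
    case "ArrowDown":
      return { ...state, selectedId: rows[Math.min(index + 1, rows.length - 1)].id };
    case "ArrowRight": {
      // Expand only a row that has children and is currently collapsed.
      if (!row.hasChildren || !state.collapsed.has(row.id)) return state;
      const collapsed = new Set(state.collapsed);
      collapsed.delete(row.id);
      return { ...state, collapsed };
    }
    case "ArrowLeft": {
      // Collapse only a row that has children and is currently expanded.
      if (!row.hasChildren || state.collapsed.has(row.id)) return state;
      const collapsed = new Set(state.collapsed);
      collapsed.add(row.id);
      return { ...state, collapsed };
    }
  }
}
```

In a React component, a function like this would typically be called from an `onKeyDown` handler, with the visible rows recomputed after every change to `collapsed`.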

Scoring is based on the furthest task each model managed to complete and the number of “correction prompts” it took to get there. In case of a tie, the user experience of the development tool counts as well. The actual appearance of the waterfall in terms of colors or fonts is not taken into account.

I won’t be looking at the code at any point. This is a strictly “vibe coding” exercise; if there are errors, I’ll copy the error details and let the LLM fix the issue. If there’s bad UI, I’ll describe the problem and hopefully the model can correct it.

The prompts for each task:

- Initial structure:

Create a react component based on opentelemetry traces. It should show a table with 3 columns: Name, Duration, and Waterfall view. Each table in the row represents a span. The Name column shows the span_name. The duration is taken from the field "duration_ms". The waterfall column shows a rectangle placed in the relative space of the column according to the span's start and stop time. The spans are a tree where parent_span_id field is the span_id is the parent. The rows should be in the order starting from root span. The children will be placed directly under the parent. Each row that's a span with children should be able to be expanded and collapsed to show and hide children spans. An example input might be

    [
      {
        "timestamp": "2025-03-27 11:21:28.387",
        "service": "nodebb",
        "span_kind": "Producer",
        "method": "",
        "url": "",
        "span_name": "mongodb.update",
        "span_id": "d09f3d6c1a049742",
        "parent_span_id": "0f5622ba68b655f2",
        "status_code": "Unset",
        "duration_ms": 29
      },
      ...
    ]

- Time markers:

Instead of the column title "Waterfall" add time markers showing duration from 0 to the max duration

- Keyboard navigation:

Each row should be selectable and focusable on click. When selected or focused, it should have a border around the entire row. Pressing on arrow keys allows navigating between rows. Up and down goes up and down between rows as expected, skipping collapsed rows. Pressing on the right arrow key when focused on a row that has children but collapsed will expand that row. Pressing on left arrow key will collapse a row.

- Transition:

Have the expand & collapse actions to be with a smooth transition
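For readers curious about what these prompts ask for under the hood, here is a minimal sketch of the data side: turning the flat span list into ordered rows, placing each bar, and computing time markers. It is not taken from any of the generated components. The function names (`visibleRows`, `barPosition`, `timeMarkers`) are hypothetical, and I'm assuming that timestamps can be parsed as UTC, that a span whose parent is missing from the input is a root, that siblings are ordered by start time, and that five evenly spaced markers are wanted.

```typescript
// Span shape follows the example input; only the fields used here are typed.
interface Span {
  timestamp: string; // e.g. "2025-03-27 11:21:28.387"
  span_name: string;
  span_id: string;
  parent_span_id: string;
  duration_ms: number;
}

interface Row {
  span: Span;
  depth: number;
  hasChildren: boolean;
}

// Assumption: timestamps are UTC with millisecond precision.
function startMs(span: Span): number {
  return Date.parse(span.timestamp.replace(" ", "T") + "Z");
}

// Depth-first flattening: every parent is followed directly by its children,
// and the children of collapsed spans are left out.
function visibleRows(spans: Span[], collapsed: ReadonlySet<string>): Row[] {
  const ids = new Set(spans.map((s) => s.span_id));
  const childrenOf = new Map<string, Span[]>();
  const roots: Span[] = [];
  for (const s of spans) {
    if (ids.has(s.parent_span_id)) {
      const list = childrenOf.get(s.parent_span_id) ?? [];
      list.push(s);
      childrenOf.set(s.parent_span_id, list);
    } else {
      roots.push(s); // parent not in the input: treated as a root (assumption)
    }
  }
  const byStart = (a: Span, b: Span) => startMs(a) - startMs(b);
  const rows: Row[] = [];
  const visit = (s: Span, depth: number): void => {
    const kids = (childrenOf.get(s.span_id) ?? []).sort(byStart);
    rows.push({ span: s, depth, hasChildren: kids.length > 0 });
    if (!collapsed.has(s.span_id)) kids.forEach((k) => visit(k, depth + 1));
  };
  roots.sort(byStart).forEach((r) => visit(r, 0));
  return rows;
}

// Bar geometry as percentages of the waterfall column width.
function barPosition(span: Span, traceStartMs: number, traceDurationMs: number) {
  return {
    left: ((startMs(span) - traceStartMs) / traceDurationMs) * 100,
    width: (span.duration_ms / traceDurationMs) * 100,
  };
}

// Evenly spaced marker values from 0 to the max duration (count must be >= 2).
function timeMarkers(maxDurationMs: number, count = 5): number[] {
  return Array.from({ length: count }, (_, i) => (maxDurationMs * i) / (count - 1));
}
```

The `left` and `width` values map naturally onto CSS percentages for an absolutely positioned rectangle, and `depth` can drive the indentation of the Name column.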

The Results

The result components, along with versions, are available on this page. Just note that since they're all on the same page, there are some quirks with keyboard navigation and scrolling. You can see the source code here.

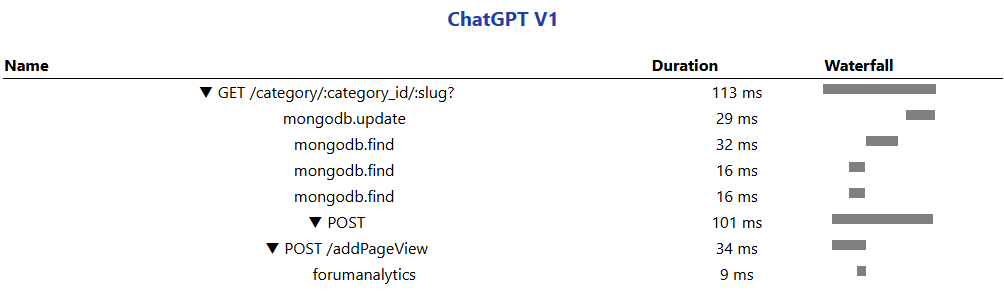

ChatGPT o1

The o1 model was able to create the basic structure of the waterfall view. However, the rows were in the wrong order, and the columns weren’t quite right.

I tried to do some prompting to correct this but without success. I even tried to ignore the columns and continue to the 2nd task of putting time markers, but that failed as well. ChatGPT was out of the race. ChatGPT Full Conversation.

DeepSeek

Every time I try DeepSeek, I’m impressed—and this time was no different. DeepSeek completed the first 3 tasks with the fewest prompts. I didn’t have to correct it once.

The first 3 tasks were: basic waterfall view, time markers, and keyboard navigation. On the 4th task, however, when I asked for smooth transitions, the magic broke. DeepSeek produced code that resulted in exceptions. I tried several prompts to fix it, but it seemed to have reached an unrecoverable state.

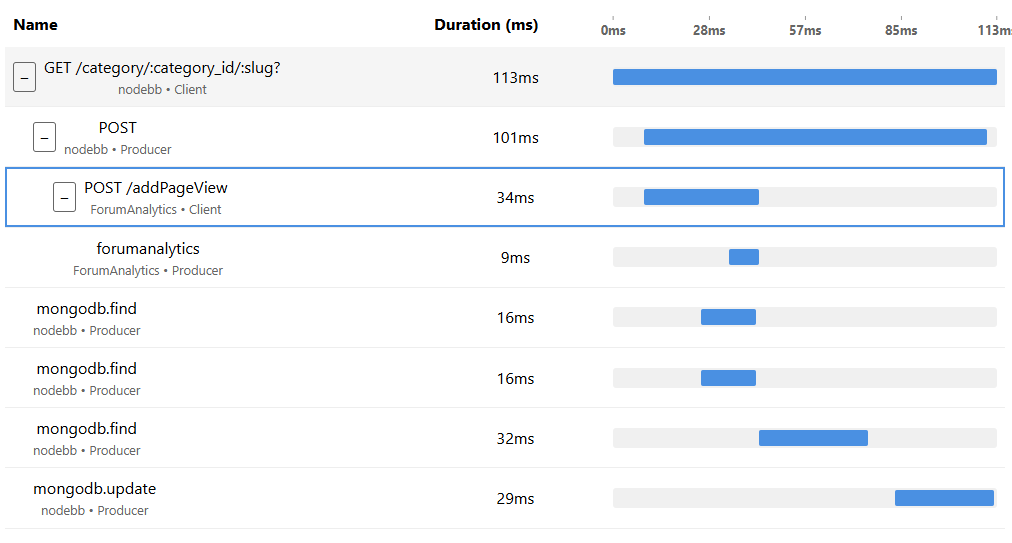

Claude Sonnet v3.7

Claude recently became my go-to model for programming tasks, and it performed very well here too. It was able to complete the first 3 tasks with just a single correction prompt.

The best part of creating React components with Claude is the developer experience. The generated UI is previewed alongside your chat, so you don’t need to use a test app to copy-paste the code. Kudos to Anthropic for that.

Claude failed on the 4th task of creating smooth transitions. Not only did the transitions not work, the columns got messed up to the point I couldn’t keep working with it. Claude Full Conversation

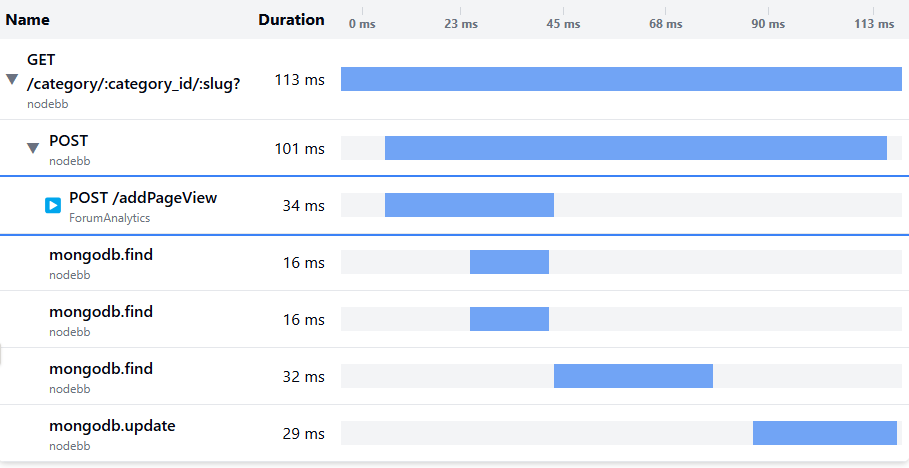

Gemini 2.5 Pro (experimental)

Gemini 2.5 Pro produced a good structure, albeit after a couple of correction prompts. Adding timeline markers worked, but also required correction prompts. Adding keyboard navigation resulted in exceptions, which I tried to fix with more prompts. This led to other exceptions, but after sticking with this “vibe coding” approach of copy-pasting the errors to Gemini, it eventually worked (to my surprise).

Adding transitions worked on the first try, but the column structure got messed up. I tried to fix it, but then the transitions stopped working. More prompts didn't help, and Gemini was out of the race just short of the finish line. Full Conversation with Gemini

Vercel V0

Vercel V0 is not a model by itself. It's an LLM-based product that produces UI with “tailwindcss” and “shadcn” components. But I'd argue that I wasn’t using the pure model with the others either. ChatGPT is an app that uses the o1 model, just as Claude is a web app that uses the Claude Sonnet v3.7 model. In any case, as a person who wants to create UI components, I consider Vercel V0 to be a valid alternative to the others.

Vercel V0 was able to get the job done—albeit there were several times where I had to use correction prompts. It got the feedback and was able to get back on its feet.

More impressively, Vercel V0 was the only tool that was able to complete all 4 tasks, along with smooth transitions for expanding and collapsing rows! It also provided a great user experience since (like Claude) it was able to preview the results on the fly.

Vercel V0 is free, by the way, up to 10 prompts per component. All 4 tasks were done in 9 prompts. V0 Full Conversation.

Final Ranking

The winner of this matchup is without doubt Vercel V0, which was the only one to complete all 4 tasks.

The full ranking is (from best to worst):

- 👑 Vercel V0 👑

- Claude Sonnet 3.7

- DeepSeek

- Gemini 2.5 Pro

- ChatGPT o1

Claude, DeepSeek, and Gemini performed more or less the same. They were able to complete the first 3 tasks but not the 4th. I gave Claude 2nd place because it required very few correction prompts and because of the excellent developer experience. DeepSeek required even fewer prompts than Claude but broke pretty badly when trying to complete the 4th task. Gemini required quite a lot of correction prompts to get the first 3 tasks right.

My biggest surprises are the first and last places. I didn't expect Vercel V0 to perform the best, and I certainly didn't expect ChatGPT o1 to be the worst. Could it be that the industry leader's models have fallen behind those of its competitors? It's just a single test in a single niche, so you can be the judge of that.